Experiment: LeNet-1 Results

Tags: CV, CNN, LeNet

Experiment Log: Comparing current findings with the original paper

Hypothesis

This implementation of the LeNet-1 architecture should reproduce the results reported in the original paper.

Link to Code: View Full Notebook & Procedure

Experimental Setup

- Model/Architecture: LeNet-1

- Dataset: MNIST

- Preprocessing: normalization, resizing (28x28)

- Hyperparameters:

  - Learning rate: 0.01

  - Batch size: 32

  - Epochs: 20

- Loss Function: Cross Entropy Loss

- Optimizer: SGD (momentum = 0.9, learning rate = 0.01)
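The log names the architecture but does not spell out its layers. The following is a minimal PyTorch sketch. It assumes the classic LeNet-1 layout: 4 feature maps from 5x5 convolutions, 2x2 average pooling, 12 feature maps from 5x5 convolutions, 2x2 average pooling, then a linear layer to 10 classes. It also assumes Tanh activations and no padding, which is consistent with the Next Steps list. The class name `LeNet1` is hypothetical. Connecting every conv1 map to every conv2 map is a simplification that may not match the original connection scheme.

```python
import torch
import torch.nn as nn


class LeNet1(nn.Module):
    """Hypothetical LeNet-1 sketch: Tanh activations, average pooling, no padding."""

    def __init__(self, num_classes: int = 10) -> None:
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 4, kernel_size=5),   # 1x28x28 -> 4x24x24
            nn.Tanh(),
            nn.AvgPool2d(kernel_size=2),      # 4x24x24 -> 4x12x12
            nn.Conv2d(4, 12, kernel_size=5),  # 4x12x12 -> 12x8x8 (every input map feeds every output map: an assumption)
            nn.Tanh(),
            nn.AvgPool2d(kernel_size=2),      # 12x8x8 -> 12x4x4
        )
        self.classifier = nn.Linear(12 * 4 * 4, num_classes)  # 192 features -> 10 logits

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.features(x)
        x = torch.flatten(x, start_dim=1)  # keep the batch dimension
        return self.classifier(x)          # raw logits; CrossEntropyLoss applies log-softmax itself


model = LeNet1()
logits = model(torch.zeros(32, 1, 28, 28))  # one batch of 32 blank 28x28 images
print(logits.shape)  # torch.Size([32, 10])
```

The model returns raw logits rather than probabilities because `nn.CrossEntropyLoss` expects unnormalized scores.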

Procedure

- Used PyTorch to load the MNIST data.

- Resized input images to 28x28.

- Used `CrossEntropyLoss()` as the loss function.

- Optimizer: `optim.SGD(model.parameters(), lr=0.01, momentum=0.9)`.

- Trained for 20 epochs.
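The procedure steps can be sketched as a data-loading helper and a training loop. This is an illustration under stated assumptions, not the notebook's actual code. The helper names `make_loaders` and `train` are hypothetical. The normalization constants `(0.1307,)` and `(0.3081,)` are the commonly used MNIST mean and standard deviation, but the log does not say which values were used. The loss, optimizer, learning rate, momentum, batch size, and epoch count follow the setup above.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader


def make_loaders(root: str = "./data", batch_size: int = 32):
    """Hypothetical helper: MNIST train/test loaders with resize + normalization."""
    # Imported here so that train() below works even where torchvision is absent.
    from torchvision import datasets, transforms

    transform = transforms.Compose([
        transforms.Resize((28, 28)),                 # MNIST digits are already 28x28; mirrors the logged step
        transforms.ToTensor(),                       # uint8 [0, 255] -> float [0, 1]
        transforms.Normalize((0.1307,), (0.3081,)),  # assumed MNIST mean/std
    ])
    train_set = datasets.MNIST(root, train=True, download=True, transform=transform)
    test_set = datasets.MNIST(root, train=False, download=True, transform=transform)
    return (DataLoader(train_set, batch_size=batch_size, shuffle=True),
            DataLoader(test_set, batch_size=batch_size))


def train(model: nn.Module, loader: DataLoader, epochs: int = 20, lr: float = 0.01):
    """Train with CrossEntropyLoss and SGD(momentum=0.9); returns (loss, accuracy) per epoch."""
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.SGD(model.parameters(), lr=lr, momentum=0.9)
    history = []
    for epoch in range(epochs):
        model.train()
        total_loss, correct, seen = 0.0, 0, 0
        for images, labels in loader:
            optimizer.zero_grad()              # clear gradients from the previous step
            logits = model(images)
            loss = criterion(logits, labels)
            loss.backward()
            optimizer.step()
            total_loss += loss.item() * labels.size(0)  # loss is a batch mean; rescale to a sum
            correct += (logits.argmax(dim=1) == labels).sum().item()
            seen += labels.size(0)
        history.append((total_loss / seen, correct / seen))
        print(f"epoch {epoch + 1}: loss={total_loss / seen:.4f} acc={correct / seen:.2%}")
    return history
```

Usage: `train_loader, test_loader = make_loaders()` followed by `train(model, train_loader)`. Here `model` is any `nn.Module` that maps 1x28x28 images to 10 logits. The reported loss and accuracy are averages over each epoch, which may differ from how the notebook computed its training figures.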

Results

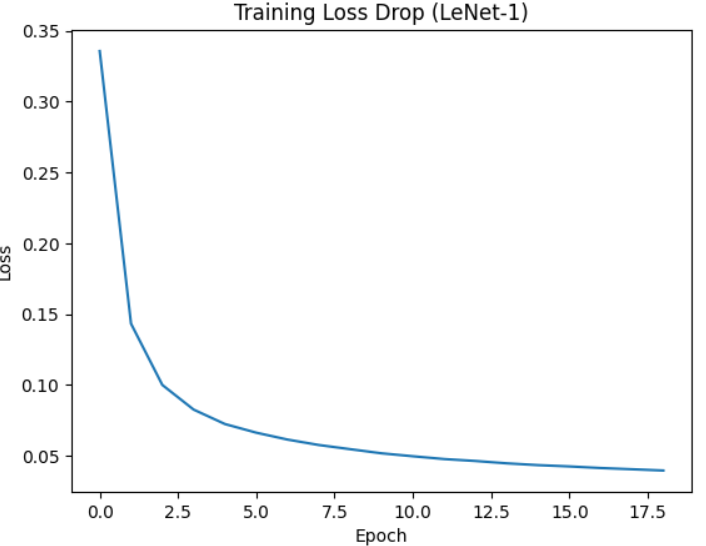

- Training Loss: 0.0397

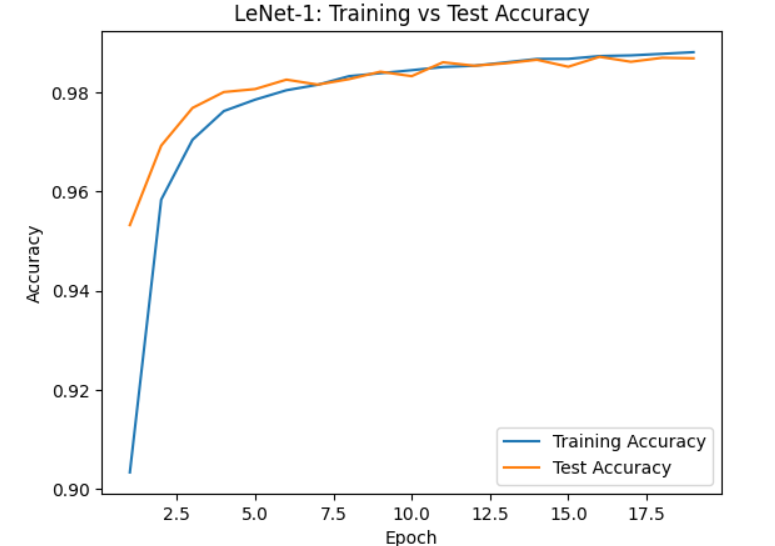

- Training Accuracy: 98.80%

- Test Accuracy: 98.68%

- Test Error Rate: 1.32%
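The test error rate is simply 100% minus the test accuracy: 100 − 98.68 = 1.32. If the full 10,000-image MNIST test set was used, that corresponds to 9,868 correctly classified digits and 132 misclassified ones. Below is a minimal evaluation sketch that computes both numbers. The function name `evaluate` is hypothetical.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader


@torch.no_grad()  # no gradients needed for evaluation
def evaluate(model: nn.Module, loader: DataLoader) -> tuple[float, float]:
    """Return (accuracy, error_rate) in percent; error_rate = 100 - accuracy."""
    model.eval()
    correct, seen = 0, 0
    for images, labels in loader:
        preds = model(images).argmax(dim=1)  # predicted class = highest logit
        correct += (preds == labels).sum().item()
        seen += labels.size(0)
    accuracy = 100.0 * correct / seen
    return accuracy, 100.0 - accuracy
```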

Visualizations

Observations

I obtained a lower test error rate (1.32%) than the 1.7% reported for LeNet-1 in the original paper, even though the same architecture and procedure were used. A result in this range is expected for such a small network, and the improvement is plausibly due to modern initialization techniques.
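A back-of-the-envelope count can put a number on how small the network is. It assumes 28x28 inputs, 4 and 12 feature maps, 5x5 kernels, 2x2 pooling, and every conv2 map connected to all four conv1 maps. That last point is a simplification, so the original network's exact count may differ. The helper names are hypothetical.

```python
def conv_out(size: int, kernel: int, padding: int = 0, stride: int = 1) -> int:
    """Spatial output size of a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def lenet1_summary(input_size: int = 28) -> dict:
    """Trace feature-map sizes and parameter counts for the assumed LeNet-1 layout."""
    s1 = conv_out(input_size, 5)    # conv1: 1 -> 4 maps, 5x5 kernels
    p1 = conv_out(s1, 2, stride=2)  # 2x2 pooling
    s2 = conv_out(p1, 5)            # conv2: 4 -> 12 maps, 5x5 kernels
    p2 = conv_out(s2, 2, stride=2)  # 2x2 pooling
    params = {
        "conv1": 4 * (1 * 5 * 5 + 1),      # 25 weights + 1 bias per map
        "conv2": 12 * (4 * 5 * 5 + 1),     # 100 weights + 1 bias per map
        "fc": 10 * (12 * p2 * p2 + 1),     # 192 inputs + 1 bias per class
    }
    return {"sizes": [s1, p1, s2, p2], "params": params, "total": sum(params.values())}


summary = lenet1_summary()
print(summary["sizes"])  # [24, 12, 8, 4]
print(summary["total"])  # 3246
```

About 3,200 parameters against 60,000 MNIST training images leaves little room to overfit. This fits the small gap between training accuracy (98.80%) and test accuracy (98.68%).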

Conclusions

The results successfully reproduced the expected performance of LeNet-1.

Next Steps / Ideas

- Rerun the training using max pooling instead of average pooling.

- Try ReLU instead of Tanh.

- Add padding.
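The three planned changes can be exposed as constructor switches, so each rerun differs from the baseline in one factor only. This is a minimal sketch. The class name `LeNet1Variant`, its argument names, and `padding=2` ("same" padding for 5x5 kernels) are hypothetical choices. The defaults give the Tanh, average-pooling, no-padding layout implied by these next steps. Because padding changes the size of the final feature map, the linear layer's input size is inferred with a dummy forward pass instead of being hard-coded.

```python
import torch
import torch.nn as nn


class LeNet1Variant(nn.Module):
    """Hypothetical LeNet-1 variant for the planned pooling/activation/padding ablations."""

    def __init__(self, pool: str = "avg", activation: str = "tanh", padding: int = 0) -> None:
        super().__init__()
        pool_layer = {"avg": nn.AvgPool2d, "max": nn.MaxPool2d}[pool]
        act_layer = {"tanh": nn.Tanh, "relu": nn.ReLU}[activation]
        self.features = nn.Sequential(
            nn.Conv2d(1, 4, kernel_size=5, padding=padding),
            act_layer(),
            pool_layer(kernel_size=2),  # stride defaults to the kernel size
            nn.Conv2d(4, 12, kernel_size=5, padding=padding),
            act_layer(),
            pool_layer(kernel_size=2),
        )
        # Infer the flattened feature size from one dummy 28x28 image.
        with torch.no_grad():
            n_flat = self.features(torch.zeros(1, 1, 28, 28)).numel()
        self.classifier = nn.Linear(n_flat, 10)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(torch.flatten(self.features(x), start_dim=1))


baseline = LeNet1Variant()                                            # avg pooling, Tanh, no padding
relu_max_padded = LeNet1Variant(pool="max", activation="relu", padding=2)
print(baseline.classifier.in_features)         # 192 (12 x 4 x 4)
print(relu_max_padded.classifier.in_features)  # 588 (12 x 7 x 7)
```

Changing one argument at a time, for example `LeNet1Variant(pool="max")` alone, keeps any difference from the 98.68% baseline attributable to a single change.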

Comments